What If False Beliefs Make You Happy?

The Catch-22 of beneficial illusions

Suppose a genie offered to get rid of all your false beliefs and replace them with true ones. Which false beliefs? Well, you don’t know yet, obviously, but it’s safe to assume that there must be some. None of us is perfectly rational, after all, and we are all susceptible to misjudgement, bias and self-deception. So suppose you could magically erase all your illusions: about the universe, your own self, your loved ones. Finally to know the truth and nothing but the truth about everything! Would you take the offer?

Most people wouldn’t. A life of complete truthfulness, they believe, would just be bleak and depressing. In the Book of Ecclesiastes (1:18) we read that too much knowledge can be a heavy burden: “For with much wisdom comes much sorrow; the more knowledge, the more grief.” Similarly, in his famous novel In Search of Lost Time, Marcel Proust wrote that “we are all of us obliged, if we are to make reality endurable, to nurse a few little follies in ourselves.”

Notice the qualifiers in both quotes. Some wisdom may be good for you, but “much wisdom” is a curse. Being totally deluded about reality is probably a bad idea, but who could object to some “little follies”, a few judicious falsehoods to sugar-coat the harshness of reality? In its full weight, Proust and the author of Ecclesiastes concur, the yoke of truth is simply unbearable.

Other people have expressed a more cheerful view of reality and its woes. In Woody Allen’s Annie Hall, little Alvy is plunged into depression after reading in a magazine that the universe is expanding and all matter will eventually decay. Listless and no longer interested in his schoolwork, he is whisked off to a psychiatrist. But when Alvy explains to the shrink what is troubling him, his mother remonstrates with him: “What has the universe got to do with it? You’re here in Brooklyn! Brooklyn is not expanding!” With similar down-to-earth insouciance, the Greek philosopher Epicurus shrugged at his own mortality: “Death does not concern us, because as long as we exist, death is not here. And when it does come, we no longer exist.” What’s to worry about?

We could adopt the same attitude towards some inconvenient truths about ourselves. Of course we all have our shortcomings, foibles and personal embarrassments. But are we really such frail creatures that we cannot look at ourselves in the mirror without donning rose-tinted spectacles? Sure, life is quite absurd and death’s the final word, as Eric Idle sang in Life of Brian, but who cares? Why not look on the bright side of life?

Let’s assume, for the sake of argument, that Marcel Proust was right: we all need some “little follies” to make life bearable. What sort of misbeliefs should we indulge in then? Not just any old falsehoods, you reckon. It goes without saying that we don’t want foolish, crazy or dangerous misbeliefs running amok in our heads. If we are going to stray from the path of truth, we have to tread carefully, or things can get tricky. We must embrace misbeliefs that are both wholesome for body and spirit – or perhaps for society at large – and free from dangerous side-effects.

Some psychologists champion what they call ‘positive illusions’, mild misapprehensions about ourselves that are conducive to health and happiness: the illusion that we are more attractive, talented and intelligent than other people, that we have more control over our lives than is really the case, and that no bad things will happen to us in the future. Some misbeliefs about the universe at large may also yield benefits to individual believers or to society. A prime candidate, of course, is religion. Even some atheists agree with the philosopher Voltaire that, if God did not exist, it would be necessary to invent Him. Scholars of religion have argued that belief in God may be a biological or cultural adaptation, which evolved to solve the problem of large-scale cooperation, or just to soothe our existential anxieties.

In a similar vein, some philosophers have suggested that contra-causal free will, even if it doesn’t exist, is an indispensable fiction without which our societies would break down. In fact, one can dream up psychological or social benefits for just about every form of irrationality, superstition or pseudoscience. Believers in homeopathy may get better through the placebo effect, conspiracy theorists may benefit from a sense of meaning in a chaotic world, and those who believe in astrology may experience the universe as a cozier and more comfortable place to live in.

Imagine that, after some diligent searching and weighing of different options, we conclude that some of these false beliefs would indeed be beneficial for us, and do not carry significant risks. Suppose that I conclude that the belief in an afterlife – a pleasant one, that is – would make me a happier person. Or the belief that I will never be afflicted with any life-threatening disease, or the belief that I’m tremendously talented, intelligent, funny—and modest besides. Such delightful notions! Now that I’ve done the hard work and weighed the costs and benefits of different follies, it’s time to embrace the most salubrious ones. I decide, through sheer willpower, to believe!

But of course, it doesn’t work like that. You can’t just flip a switch in your brain and decide to believe that our souls will survive the death of our bodies, or that you are a wonderful violinist. Even if, as a matter of fact, a certain belief would make me perfectly happy, I’d still be incapable of simply making the requisite leap of faith.

Does that mean that we can’t entertain some pleasant falsehoods? Not quite. It’s just that you can’t choose your own misbeliefs, and once you have fallen under their spell, you can no longer identify them. It’s easy enough to see through someone else’s self-deception, but it’s a lot trickier to direct your critical gaze inward. In fact, if you could identify your own illusions, you would extinguish them in the process.

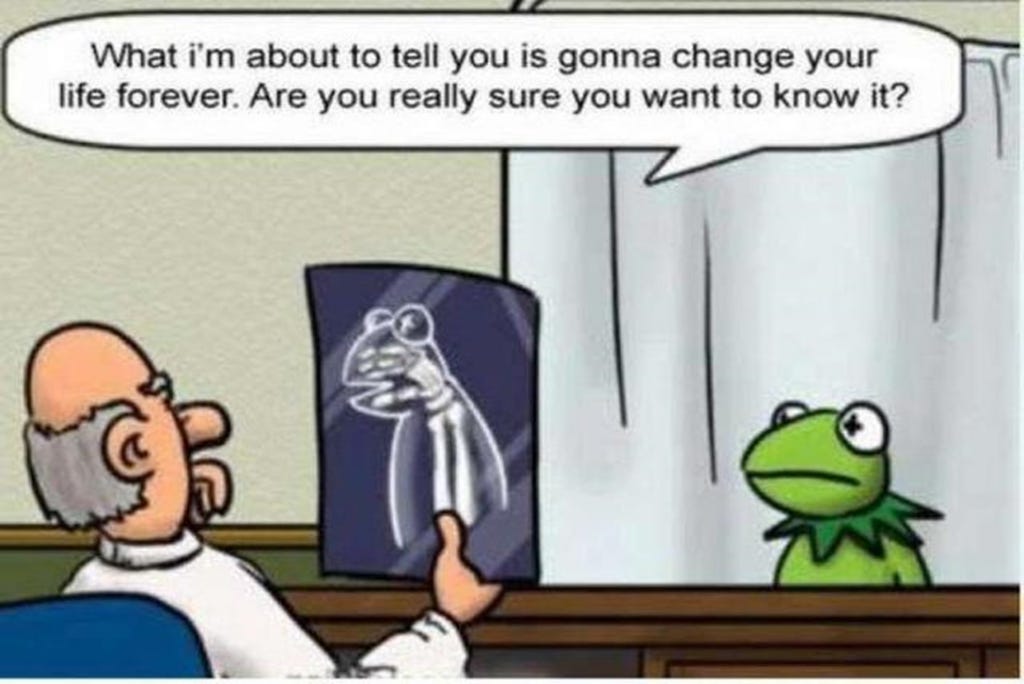

But if you cannot even tell which of your beliefs are false, how can you have any confidence that these beliefs are not dangerous and reckless delusions? The whole quest for beneficial misbeliefs, it seems, runs into a strange paradox, reminiscent of Joseph Heller’s absurdist novel Catch-22. The titular paradox of Heller’s story about the US Army Air Forces bombardier John Yossarian during the Second World War goes as follows: any pilot is forced to fly dangerous combat missions unless he can prove he’s insane. Anyone in his right mind, of course, doesn’t want to risk his life in dangerous (and largely pointless) bombing campaigns; you’d have to be crazy! So anyone who volunteers to fly must be barking mad and is, for that very reason, exempt from flying missions. Conversely, a pilot who refuses to fly is, by this very act, showing evidence of sanity and is hence obliged to fly. In Heller’s words: “If he flew them he was crazy and didn’t have to; but if he didn’t want to he was sane and had to.”

The idea of voluntarily embracing some beneficial misbelief runs into a similar Catch-22. In order to find out which misbeliefs are beneficial and which are harmful, you have to investigate the attendant benefits and downsides. But once you’ve done that, you are no longer in a position to embrace your favorite misbelief, which means that you’re also unable to reap its benefits. An illusion will only make you happy if you’re fully under its spell, and blissfully unaware of it. On the other hand, if you are under the spell of some misbelief, you can no longer identify it and hence you are no longer in a position to tell whether your misbelief is beneficial or harmful. In order to do that, you would need to ‘step out of’ your illusory world, measure it against reality, and check whether it might ever get you into trouble. But as soon as you even contemplate such a project, the spell will be broken. You have dispelled your own “little folly”.

Is there any way out of this doxastic Catch-22? One option is paternalistic deception, or “noble lies”: why not rely on someone else to keep an eye on you? For instance, your wise doctor prescribes a sham pill, persuading you that it will really cure your ailment so that you can benefit from the placebo effect, but all the while making sure that you don’t do anything foolish. But here again, the Catch-22 of beneficial misbelief threatens to rear its head indirectly: how do you initiate such a paternalistic relation to start with? It won’t do to tell your doctor beforehand: “OK, so from now on you have my permission to deceive me, if you believe it will make me feel better”. Such an agreement would defeat the purpose, undermining your confidence in whatever pill or treatment your doctor goes on to prescribe. And if you can’t know when you’re initiating a relationship of paternalistic deception, how can you be sure that your doctor has your own best interests at heart and will intervene if things get out of hand?

Another way out of the Catch-22 is to rely on the wisdom of evolution. What if some supernatural misbeliefs have been carefully ‘designed’ by natural selection for our benefit? Even if God doesn’t exist, it was necessary for evolution to invent him. The problem is that, even if you think such evolutionary accounts are plausible, natural selection (whether in the biological or cultural realm) does not really care about our happiness. According to scholars like Ara Norenzayan and Joe Henrich, belief in moralizing Big Gods has fostered pro-sociality and enabled large-scale human cooperation. That sounds beautiful and uplifting, but if you look a little closer, it turns out that it’s mostly the nasty, vengeful, punishing gods that bring pro-social benefits. The stick works better than the carrot. Which raises the question: is belief in a wrathful god who will torture you in hell if you disobey him really good for you, even if we assume that it has helped to scale up human cooperation?

Moreover, in evolutionary accounts of religion there’s always a flipside to pro-sociality: being friendly to “us” also means being hostile and aggressive to “them”. There is a dark side to human pro-sociality that is often sanitized in popular accounts. Again, are misbeliefs that lead to intergroup conflict really good for us? As the philosopher Daniel Dennett likes to point out: cui bono? Who or what benefits from the evolved misbeliefs in question? In my academic work with the historian Steije Hofhuis, I have investigated the cultural evolution of ‘mind parasites’, systems of misbeliefs that subvert and harm the interests of their human hosts, such as the witchcraft beliefs that fueled the witch hunts in early modern Europe. Such beliefs may be cleverly designed and adapted, but only for their own survival and reproduction.

Yet another way out of the Catch-22 is to collapse the roles of deceiver and believer into one person – otherwise known as “self-deception”. You may not be able to flip a switch in your brain, but perhaps you can engage in more subtle forms of belief manipulation, gently nudging yourself towards beneficial illusions. Here’s what the 17th-century philosopher Blaise Pascal, in his famous Pensées, advised those who are desperate to believe in God but can’t find the switch in their brain: just go through the motions. Go to Mass on Sundays, genuflect, utter the prayers, sing along with the hymns. If you keep this up for a while, Pascal counseled, belief will creep up on you.

But such a project of self-deception cannot tolerate too much in the way of self-reflection. You don’t just have to bring yourself to believe in God; you must also – and simultaneously – forget that this is in fact what you’re doing. As long as you remain aware that you’re engaging in a project of self-deception, I doubt that Pascal’s advice will achieve the desired effect. At the very least, there will always be some nagging doubt at the back of your mind about why you embarked on this whole church-going and hymn-singing project in the first place. And remember that you can only reap the benefits of your beneficial misbelief if you truly and sincerely believe it.

To see what it would take to really escape the doxastic Catch-22, we need another thought experiment. Suppose I offer you a pill that has the following effect. If you swallow it in the evening and go to bed, you will wake up in the morning with a perfect belief in life after death (or whatever other pleasant belief you fancy). Of course the pill would also erase your memory of having swallowed it in the first place, or else – again – your belief wouldn’t stick (don’t leave the prescription lying around!). Presumably the pill would also create some internal justifications to shore up your belief: perhaps some bogus ‘evidence’ involving a near-death experience or just the inner sense of certainty that, yes, truly there is a life after this one. Would you swallow such a pill? I’ve put this question to some colleagues and friends, and to my surprise some immediately said yes: please give us that pill and liberate us from this bleak and depressing reality!

For my part, I’m not so sure. Is such a life of voluntary delusion really what you should want? Even if you don’t have any objections to untruthfulness per se, how can you foresee all of the consequences and ramifications of your false belief in an afterlife, or in any other comforting fiction? If you were absolutely convinced that your personal death (or that of other people) doesn’t really matter, because there’s another life after this one, you might end up doing some crazy and reckless things. And if you genuinely believe that you are wonderfully talented, that your health is perfectly fine or that your spouse is not cheating on you (despite extensive evidence to the contrary), you may still be “mugged by reality” later on. Reality, as the writer Philip K. Dick argued, is that which, after you stop believing in it, doesn’t go away.

At the end of the day, would you not prefer to take the red pill and live by the truth, warts and all?

You focus on pleasant misbeliefs, rather than on what is, in my opinion, much more common: distressing misbeliefs.

For instance, you write, "Some misbeliefs about the universe at large may also yield benefits to individual believers or to society. A prime candidate, of course, is religion," assuming that it's belief in God--a belief held by virtually all humans ever--that's the comforting/evolved misbelief, and that atheism is the correct view; that all those many billions of humans who experience transcendence and have faith are the ones who are deluded, and that the tiny minority of atheists are the only ones seeing things clearly. And that could be the case--the fact that the vast majority of people believe something doesn't make it true. But it doesn't make it false, either, and if everyone except me thinks something is true, then the burden of proof is kind of on me, not them. Why does the more cynical, but equally unfalsifiable, belief that there is no God get the benefit of the doubt?

I think this is tied to something you've written about before, for instance in "Seven Laws of Declinism." In that essay, you summarize reasons why bad news has more salience than good news. But what happens when you try to disabuse someone of their negative false beliefs? Person: "There are more cancer deaths now than ever!" Me: "Well, insofar as way more people live long enough to get cancer, maybe...but age-standardized cancer rates are so much lower now than they were in the past, and plus we're so much better at curing and preventing a lot of different cancers, so, overall, no, we're doing pretty great on the cancer front." How does that person respond--"yay, awesome, I'm glad I was wrong about cancer" or "that can't be true, what about [reason]/[anecdote]"? Given what you write about, I suspect your experience with this scenario is the same as mine, and you know that people are, uh, not happy to learn that the world is better off than they thought.

People don't delude themselves into accepting comforting beliefs; they delude themselves into thinking the world is worse than it is.

I'm sure I'm not an exception to this, and I probably believe a lot of horrible things that are actually false. So yeah, feed me the red pill. I'll likely believe way more comforting things, and be more sanguine about the disquieting ones.

Really enjoyed this article. When I write or read about topics like this, I am often reminded of Richard Feynman's quote:

“You must not fool yourself--and you are the easiest person to fool.”

I like to start from the whole "know thyself" principle and build outward from there. It does not always work and is full of blind spots, but I think if someone is really dedicated to knowing themselves, they can work to minimize those blind spots through reflection, being held accountable by others, and the constant pursuit of knowledge.

I think where most people fall short is that they stop learning and instead do their research by confirming what they already know. Confirmation bias is a huge issue today, especially when Google/GPT can just give you exactly what you ask for.